AI and Exoskeletons Team Up to Transform Human Performance on Earth and in Space

Researchers have developed an AI-enhanced controller for exoskeletons that learns to support various movements such as walking and running without individual calibration. This system reduces energy expenditure significantly, making it a promising tool for enhancing human mobility efficiently.

A new AI controller for exoskeletons, capable of learning different human movements without specific programming, has demonstrated substantial energy savings, marking a major step forward in wearable robot technology.

Imagine safer, more efficient movements for factory workers and astronauts, as well as improved mobility for people with disabilities. That vision could someday become a more widespread reality, thanks to new research published on June 12, 2024, in the journal Nature.

Called “exoskeletons,” wearable robotic frameworks for the human body promise easier movement, but technological hurdles have limited their broader application, explained Dr. Shuzhen Luo of Embry-Riddle Aeronautical University — first author of the Nature paper, with corresponding author Dr. Hao Su of North Carolina State University (NC State) and other colleagues.

To date, exoskeletons must be pre-programmed for specific activities and individuals, based on lengthy, costly, labor-intensive tests with human subjects, Luo noted.

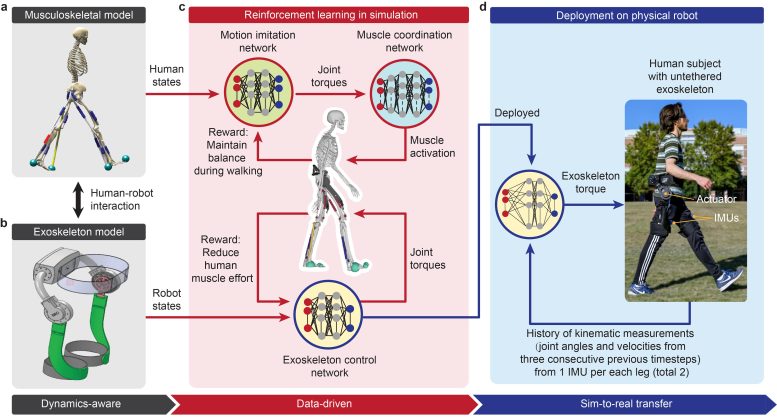

Researchers developed a full-body musculoskeletal human model consisting of 208 muscles (upper left), as well as a custom hip exoskeleton (lower left), then leveraged artificial intelligence to simulate multiple activities (center) before deploying the learned controller on human subjects. Credit: Nature, Luo et al., Figure 2.

Introducing AI-Powered Control

Now, researchers have described a super smart or “learned” controller that leverages data-intensive artificial intelligence (AI) and computer simulations to train portable, robotic exoskeletons.

“This new controller provides smooth, continuous torque assistance for walking, running, or climbing stairs without the need for any human-involved testing,” Luo reported. “With only one run on a graphics processing unit, we can train a control law or ‘policy,’ in simulation, so that the controller can effectively assist all three activities and various individuals.”

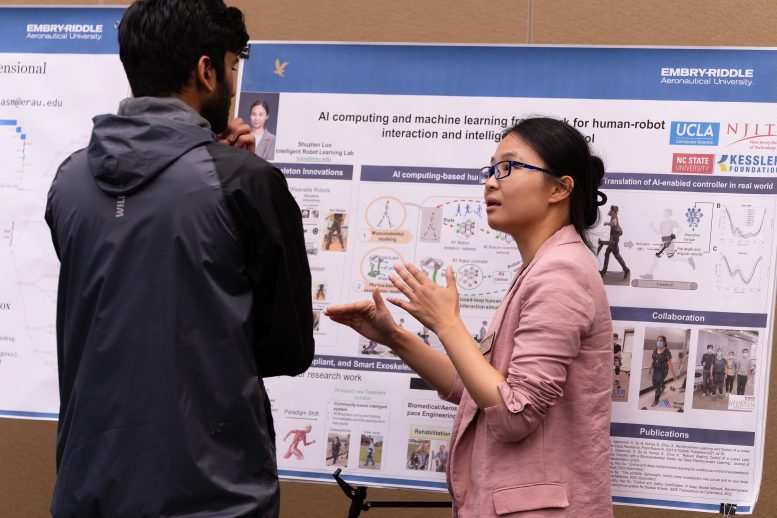

Dr. Shuzhen Luo of Embry-Riddle Aeronautical University (at right), whose work appears in “Nature” on June 12, 2024, discusses her research related to AI-powered robotic exoskeletons, during an internal poster presentation. Credit: Embry-Riddle/David Massey

Revolutionary Energy Reductions

Driven by three interconnected, multi-layered neural networks, the controller learns as it goes — evolving through “millions of epochs of musculoskeletal simulation to improve human mobility,” explained Dr. Luo, assistant professor of Mechanical Engineering at Embry-Riddle’s Daytona Beach, Florida, campus.

The experiment-free, “learning-in-simulation” framework, deployed on a custom hip exoskeleton, generated what appears to be the highest metabolic rate reductions of portable hip exoskeletons to date — with an average of 24.3%, 13.1%, and 15.4% reduced energy expenditure by wearers, for walking, running and stair-climbing, respectively.

These energy reduction rates were calculated by comparing the performance of human subjects both with and without the robotic exoskeleton, Su of NC State explained. “That means it’s a true measure of how much energy the exoskeleton is saving,” said Su, associate professor of Mechanical and Aerospace Engineering. “This work is essentially making science fiction reality — allowing people to burn less energy while conducting a variety of tasks.”
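The with-versus-without comparison described above comes down to a simple percentage. The following is a minimal sketch of that arithmetic, not the paper's measurement protocol: the function name `percent_reduction` and the sample metabolic rates are hypothetical, chosen only to show the calculation.

```python
def percent_reduction(without_exo: float, with_exo: float) -> float:
    """Percent drop in metabolic rate when the exoskeleton is worn."""
    if without_exo <= 0:
        raise ValueError("baseline metabolic rate must be positive")
    return 100.0 * (without_exo - with_exo) / without_exo


# Hypothetical metabolic rates (W/kg), for illustration only; not study data.
baseline = 4.0   # same task performed without the exoskeleton
assisted = 3.0   # same task performed with the exoskeleton

print(f"{percent_reduction(baseline, assisted):.1f}% reduction")  # prints "25.0% reduction"
```

Because the baseline is the same person doing the same task unassisted, the resulting figure reflects what the device saves the wearer rather than a comparison against another device.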

Bridging the Simulation-to-Reality Gap

The approach is believed to be the first to demonstrate the feasibility of developing controllers, in simulation, that bridge the so-called simulation-to-reality, or “sim2real,” gap while significantly improving human performance.

“Previous achievements in reinforcement learning have tended to focus primarily on simulation and board games,” Luo said, “whereas we proposed a new method, namely a dynamics-aware, data-driven reinforcement learning way to train and control wearable robots to directly benefit humans.”

The framework “may offer a generalizable and scalable strategy for the rapid, widespread deployment of a variety of assistive robots for both able-bodied and mobility-impaired individuals,” added Su.

Overcoming Technological Obstacles

As noted, exoskeletons have traditionally required handcrafted control laws based on time-consuming human tests to handle each activity and account for differences in individual gaits, researchers explained in the journal “Nature.” A learning-in-simulation approach suggested a possible solution to those obstacles.

The resulting “dynamics-aware, data-driven reinforcement learning approach” dramatically expedites the development of exoskeletons for real-world adoption, Luo said. The closed-loop simulation incorporates both exoskeleton controller and physics models of musculoskeletal dynamics, human-robot interaction and muscle reactions to generate efficient and realistic data. In this way, a control policy can evolve or learn in simulation.
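To make the idea of a policy "evolving in simulation" concrete, here is a deliberately tiny sketch. It is not the authors' framework: the real system uses neural networks, reinforcement learning, and a full musculoskeletal model, whereas this toy swaps in a single simulated joint, a two-gain linear controller, and plain random-search hill climbing. Every name and parameter value below (`simulate`, `train`, the joint and tracking gains) is an assumption made for illustration.

```python
import math
import random


def simulate(gains, steps=200, dt=0.01):
    """Run one closed-loop episode and return the simulated wearer's effort."""
    k_angle, k_vel = gains
    inertia, damping = 1.0, 0.5   # toy joint parameters (assumed)
    kp, kd = 100.0, 10.0          # toy "human" tracking gains (assumed)
    angle, vel, effort = 0.0, 2 * math.pi, 0.0
    for i in range(steps):
        t = i * dt
        # Gait-like target motion: a 1 Hz sinusoid.
        target = math.sin(2 * math.pi * t)
        target_vel = 2 * math.pi * math.cos(2 * math.pi * t)
        # The simulated human supplies torque to follow the target.
        human = kp * (target - angle) + kd * (target_vel - vel)
        # The controller reads the joint state and adds assistive torque.
        assist = k_angle * angle + k_vel * vel
        # Physics step: both torques act on the same joint (closed loop).
        acc = (human + assist - damping * vel) / inertia
        vel += acc * dt
        angle += vel * dt
        effort += human ** 2 * dt
    return effort


def train(iterations=300, step=0.5, seed=0):
    """Random-search hill climbing: keep a perturbed policy only if effort drops."""
    rng = random.Random(seed)
    best = [0.0, 0.0]
    best_cost = simulate(best)
    for _ in range(iterations):
        candidate = [g + rng.gauss(0.0, step) for g in best]
        cost = simulate(candidate)
        if cost < best_cost:
            best, best_cost = candidate, cost
    return best, best_cost


baseline = simulate([0.0, 0.0])   # no assistance
gains, trained = train()
print(f"effort without assistance: {baseline:.2f}")
print(f"effort with learned gains: {trained:.2f}")
```

The point of the sketch is the loop structure: controller, simulated human, and physics all run together, and the policy improves using only simulated data, with no human-subject trials involved in training.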

“Our method provides a foundation for turnkey solutions in controller development for wearable robots,” Luo said.

Future Directions in Exoskeleton Research

Future research will focus on unique gaits for walking, running, or stair climbing, to help people with conditions such as stroke, osteoarthritis, and cerebral palsy, as well as those with amputations.


Reference: “Experiment-free exoskeleton assistance via learning in simulation” by Shuzhen Luo, Menghan Jiang, Sainan Zhang, Junxi Zhu, Shuangyue Yu, Israel Dominguez Silva, Tian Wang, Elliott Rouse, Bolei Zhou, Hyunwoo Yuk, Xianlian Zhou and Hao Su, 12 June 2024, Nature.

DOI: 10.1038/s41586-024-07382-4

The Nature paper was authored by Shuzhen Luo of Embry-Riddle Aeronautical University, with Menghan Jiang, Sainan Zhang, Junxi Zhu, Shuangyue Yu, Israel Dominguez Silva and Tian Wang of North Carolina State University; and corresponding author Hao Su of North Carolina State University and the University of North Carolina at Chapel Hill; Elliott Rouse of the University of Michigan, Ann Arbor; Bolei Zhou of the University of California, Los Angeles; Hyunwoo Yuk of the Korea Advanced Institute of Science and Technology; and Xianlian Zhou of the New Jersey Institute of Technology.

Yufeng Kevin Chen of the Massachusetts Institute of Technology provided constructive feedback in support of the paper, “Experiment-free exoskeleton assistance via learning in simulation.”

The research was supported in part by a National Science Foundation (NSF) CAREER award (CMMI 1944655); the National Institute on Disability, Independent Living and Rehabilitation Research (DRRP 90DPGE0019); a Switzer Research Distinguished Fellowship (SFGE22000372); the NSF Future of Work program (2026622); and the National Institutes of Health (1R01EB035404).

In keeping with “Nature’s” publication policies, any potential “competing interests” were disclosed in the paper. Su and Luo, a former postdoctoral researcher at NC State who is now on the faculty at Embry-Riddle, are co-inventors on intellectual property related to the controller described here.